Chapter 10. Closer Than They Appear: Drawing Lessons from Development Processes for Museum Technology, Exhibitions, and Theatrical Productions

- Max Evjen

- David McKenzie

Introduction

This chapter is an outgrowth of two presentations given at Museum Computer Network 2018.

Max Evjen’s Ignite talk “Jazz Hands Out!: Why #Musetech Should Be Like Theatre”1 argued that we in museum technology should reject the fail-fast mentality borrowed from software firms and instead look to theatre as a model for taking projects slowly while still iterating based on audience feedback during development. David McKenzie’s session on prototyping sprints examined the promises and limitations of a tech-firm-derived fail-fast process for creating museum exhibition components.

Unlike technology companies, museums don’t have venture capitalists pouring millions into organizations so they can continually “break things and see what happens.” Yet, many of us in the museum field face pressures—sometimes from ourselves—to adopt this fast-fail mentality from the tech world for our own technology projects and even for creating exhibitions. A fast-fail mentality does have the advantage of trying many ideas and learning from failure and iteration. Yet, such processes are unrealistic and, we argue, undesirable for the museum world beyond certain limited uses. For many reasons, production processes in the museum world—both for exhibitions and even for technology—follow a waterfall format and require a great deal more deliberation. Rather than rejecting this model in favor of the fast-fail model of technology companies, we argue that museum professionals should look to another, more-adjacent field, theatrical production, for how to bring more iteration and audience responsiveness into our projects while not losing the virtues of our longer, deliberative production processes. At the same time, we also argue that theatre professionals can learn from museum professionals in incorporating more continual feedback throughout the production process.

In this piece, we first discuss a tech-firm-derived process—prototyping sprints—that Ford’s Theatre used with success, up to a point. Then we discuss how museums can learn from theatrical production processes for development of both exhibitions and museum technology, while comparing and critiquing all three processes and suggesting practices that each can borrow from the other(s), rather than scuttling our processes in favor of the latest from Silicon Valley. The primary focus of critique will be on how we can better create end products around the needs of constituents by involving them in the process, while not losing strengths found in each process. We also compare stages of creating theatrical productions, museum exhibitions, and museum technology.

Limitations of Fast-Fail Sprinting: An Experiment at Ford’s Theatre

In recent years, museum professionals have borrowed some fast-fail techniques from the technology world, with some limited but helpful applicability. Prototyping sprints are one such technique. In late 2017 and early 2018, McKenzie and his colleagues at Ford’s Theatre embarked on a series of six week-long prototyping sprints to find ways to engage students in the site’s encyclopedic, text-heavy core exhibition. Ford’s Theatre used a five-day sprint process. Day 1 began with an ideation session and ended with agreement on an idea to test. Days 2 and 3 involved building the prototype. Day 4 was for testing the prototype on the floor, followed by a debrief on day 5. This technique has found some use in museum spaces, most notably by Shelley Bernstein and Sara Devine at the Brooklyn Museum and by Bernstein at the Barnes Collection.2

Evaluator and facilitator Kate Haley Goldman, following the direction set by Ford’s Director of Education and Interpretation Sarah Jencks, adapted the sprinting technique from methods Google and other technology companies use to find quick and dirty solutions to specific problems.3

This experience demonstrated the promises and pitfalls of a tech-firm-derived fail-fast paradigm. Overall it proved worthwhile: it was helpful for learning quickly about ideas that may or may not work and, as a side benefit, increased staff cohesion. It did not, however, work for developing an idea beyond the initial concept stage. During the six designated sprint weeks, Ford’s Theatre staff members were able to try and discard ideas that, in many cases, had been discussed but not acted upon for years.

This process also yielded an end product that Ford’s Theatre could use: a set of historical figure cards and flip doors to help students connect with individuals from the era interpreted. After finding success with this idea during the fourth sprint week, the team created and quickly tested new iterations during the fifth and sixth weeks. This was when the limited applicability of the process became clear. There was only so much iteration that could take place in a short time frame. Actual design, development, and installation are following a more traditional waterfall process, more akin to exhibition and theatre production. However, because of the experience, Ford’s staff is also wary of building permanently printed flip doors. Instead, the end product will be a set of flip doors with windows, allowing for continual evaluation of content and resultant iteration.

Comparison of Different Processes

So, if we want to take some of the good from fast-fail approaches (quick audience feedback) while recognizing the technique’s limited applicability, what are we to do? As museum professionals, one direction we can look is to theatre. Before diving into what we can learn from our arts and culture colleagues, we share below a table comparing the very basic steps in museum exhibition, museum technology, and theatrical production processes, recognizing that these vary across institutions and projects.

| Development Stages | Museum Exhibition | Museum Technology | Theatrical Production |
|---|---|---|---|
| Strategy | Interpretive Planning: Setting messages and takeaways. May include audience feedback. | Digital Strategy: Setting direction for digital efforts. May include audience feedback. | Performance Selection: Devising, season/show selection. May include audience feedback. |
| Requirements | Concept/Schematic Design: Preliminary design approaches, nailing down the big picture. Still room to change course. Maybe some degree of audience feedback. | Requirements: Assessing the technical needs, organizing digital resources, possibly purchasing digital resources. Still room to change course. | Production Meetings: Establishing big picture design concepts. Table Read. Surround Events may include audience feedback. |
| Design | Design Development: Filling in some details, narrowing down script, still room for tweaks. Likely little or no audience feedback. | Preliminary Design: Paper prototyping. Audience feedback is essential at this point. | Rehearsals: Filling in and narrowing down script and blocking, still room for tweaks. Likely little or no audience feedback, except in the case of commercial theatre workshops, which rely heavily on audience feedback. |
| Development | Final Design: Filling in all details, finalizing the script. Little room for tweaks except of details. Likely little or no audience feedback. | Development: Filling in details, finalizing script/expression. This may include repeated develop/test cycles. | Tech Rehearsal: Lining up technical aspects of production with cues in script. Little or no audience feedback. Iterative process. |
| Testing | Production Documentation: Translating final design documents into construction drawings. Little room for tweaks except for value engineering, practical considerations. No audience feedback. | Testing: Audience feedback is incorporated, final adjustments made prior to launch. | Previews/Dress Rehearsal: Show presented in front of audience to gauge audience interest and experience to make any final changes. Audience feedback is essential. |
| Implementation | Exhibition Opening: Little room for change/some room for change. | Implementation: Product launch, little room for change. | Opening: Little room for change, only practical considerations. |
| Evaluation | Summative Evaluation: Audience feedback. Little room for tweaks; most often, large-scale changes expensive, impractical. | Summative Evaluation: Audience feedback. Little room for tweaks; most often, large-scale changes expensive, impractical. | Summative Evaluation: An opportunity, though not often seen as essential to production as it is in museum exhibitions or museum technology. |

Theatrical Production Models

Strategy: Theatrical production begins either with a script that is selected to be produced, a choreography that is set on a dance troupe, or a devised work that is created from some prompt (including themes, sites, or other inspirations). At this point, audiences who are familiar with a production company may be enlisted to provide feedback on what works are to be produced, but often the decision to produce a theatrical production lies with the artistic team.

Requirements: In the case of a company selecting a script to be produced, once the cast and designers have been selected, there are production meetings wherein the director meets with the designers (lights, sound, sets, costumes, projection, musical director if the production is a musical) to discuss their vision for the show. A table read is scheduled where cast, directors, designers, and stage managers sit around a table and read the script aloud. That typically denotes the beginning of rehearsals for the actors and stage managers. During this time some productions may conduct surround events to market the production by building word of mouth. These may include scene readings for special events, or previews of musical numbers. Audience feedback is gathered at events like these, and that feedback can inform the notes for the remainder of rehearsals.

Design: Rehearsals typically take place in rehearsal rooms outside of the theater in which the production will happen. Commercial theatre productions are not simply opened; they must first go through a workshop (a performance with scaled-down production value [lights, sound, sets, etc.] that costs significantly less than a full production) so that the producers can test the show and get audience feedback.

Development: The actors typically move into the theater after all design elements (set, lighting, sound, costumes, projections) have been “loaded in.” Then all the design elements are matched up with the cues from the actors’ performances. This is called a technical rehearsal (held during “tech week”), during which actors must make repeated entrances and exits and speak specific lines from scenes so the technical elements can all be matched up into a series of sequential cues.

Testing: After the long days of tech rehearsals, there may be previews: performances before opening at which adjustments can still be made to the production (often based on audience feedback, in addition to the Director’s preference) and to which critics may be invited so they can write reviews before “opening night.”

Implementation: On opening night, responsibility for the production passes to the Stage Manager, and only a few minor, practical adjustments are made during the run.

Evaluation: Summative evaluation is rarely considered for theatrical production, except in the case of “post-mortems” (production meetings occurring after the show is closed to consider what worked and what did not).

Issues and Critiques of Theatrical Production Models

In the long history of theatrical production, there has always been consideration for what might be appealing to audiences, or which audiences may enjoy different types of productions.4 However, there is not the same attention given to goals (or outcomes) as there is in exhibition development, where experience goals, learning goals, and affect goals are created in advance of the development of the exhibitions. Many times, performing arts organizations will just create a production irrespective of a specific target audience, especially beyond people who traditionally attend performing arts. The largest visitor bases of both museums and performing arts organizations are old and white. If they don’t consider appealing to non-traditional audiences, both run the risk of becoming irrelevant to most people.5 6 How might targets help productions reach non-traditional audiences? How might goals that are shown to be reached through evaluation speak to the need for funding future productions?

Exhibition Development Models

Strategy: Exhibition development ideally begins with high-level strategic planning—often, but not always, interpretive planning. This sets the direction for where the exhibitions or museum technology projects will ultimately focus, and where they will not. Often in the past, the vision for exhibitions at this stage largely came from curators. More recently, though, audience feedback—often as a formative evaluation involving focus groups and surveys—has come into the picture at this stage. Although things are changing (as discussed below), this is often the last moment where audience members are involved in creating an exhibition.

Requirements, Design, Development, and Testing: The exhibition development process—largely built upon architectural design processes—proceeds from big picture concepts to filling in the details. At each stage, designers present conceptual drawings and models to the rest of the project team. Sometimes audience members will be involved, but more often will not.

Implementation and Evaluation: By the time of opening and product launch, museum exhibitions are largely set. Even when institutions undertake summative evaluation—again, often through surveys, observations, and focus groups—changes are often small and around the edges, rather than major, even if major issues are discovered. Unless an institution puts significant money into the budget for post-opening changes, more often, audience feedback is chalked up to lessons learned for future projects.

Museum Technology Model

Strategy: Similar to an exhibition, often the initial idea for a museum technology product comes from internal staff; quite often, museum technology projects are supplements to exhibitions. There may be audience feedback in conceptualizing an idea, but the concept itself is often driven by institutional objectives.

Requirements, Design, Development, Testing: As with exhibition design, these stages involve filling in the details of the project. Museum technology projects involve considerable iteration at later stages, in part due to the nature of digital technology, where a minimum viable product can be built and then added onto. Exhibition projects, by contrast, become less iterative at later stages because every detail must be in place before the exhibition can be built. In other words, there is no minimum viable product for a building, and it is difficult to find an analogue for an exhibition.7 As this process proceeds, unlike what happens with exhibitions, audience members often get more involved in testing functionality, although not always the content or messaging, particularly before launch. For example, web testing often involves asking potential users to complete a task, rather than gauging their interest in the content of the website.

Implementation and Evaluation: In theory, unlike an exhibition, a museum technology product is changeable after launch. Yet wholesale changes are often nearly impossible, as the infrastructure is set. Evaluation often reveals tweaks that can be made. If a museum technology product fails, the museum is often left with little choice but to either continue maintaining it or declare it a failure and sunset it, something museums are often loath to do.

Issues and Critiques of Exhibition Development and Museum Technology Development Models

Both of these models have generally served museums well. They reflect the deliberative nature of many institutions—entities that cannot simply break things and keep trying. They result in vetted, accurate, trustworthy end products that reflect and reinforce the trust that survey after survey shows the U.S. public places in museums. An experience with deep, thoughtful content requires time to develop, like a theatrical production does. These processes, finely developed over time, suit their institutions well and, we argue, require tweaking rather than wholesale change.

What Different Models Can Learn From One Another

The main tweak we suggest involves audience feedback and continual iteration, often missing from exhibition development and even some museum technology processes. Their absence has, all too often, resulted in exhibitions and technology products that receive rave reviews from fellow experts but fail to engage their target audiences. Yet we are often then stuck with the results for years, if not decades. Unlike a theatrical production, an exhibition that fails to engage its audiences often stays open nonetheless. How can we make these semi-permanent end products more responsive to audience needs and interests throughout the process? Perhaps theatre can help us with this question, while museums can also offer ideas that could improve the theatrical production process. Below we identify five prompts about each process to elaborate further on potential learnings.

Focus on entertaining the audience

In his Ignite talk, Evjen asserted that theatrical organizations understand that if audiences don’t show up, a production gets cancelled. Museums don’t operate with that same kind of urgency, but they should. According to Colleen Dilenschneider,8 for all arts & cultural organizations, “Once adjusted for population growth, flat attendance is declining attendance.” It’s a safe bet that many museums would love to have the attendance numbers and press of Hamilton (with a Broadway production in New York City, additional productions in Chicago and Puerto Rico, and a national tour). To be fair, many theatre companies would, too, but there is a reason that some Broadway shows have extensive runs: they provide entertaining experiences that are relevant to audiences. Museums need to remember that “entertainment value motivates visitation while education value tends to justify a visit.”9

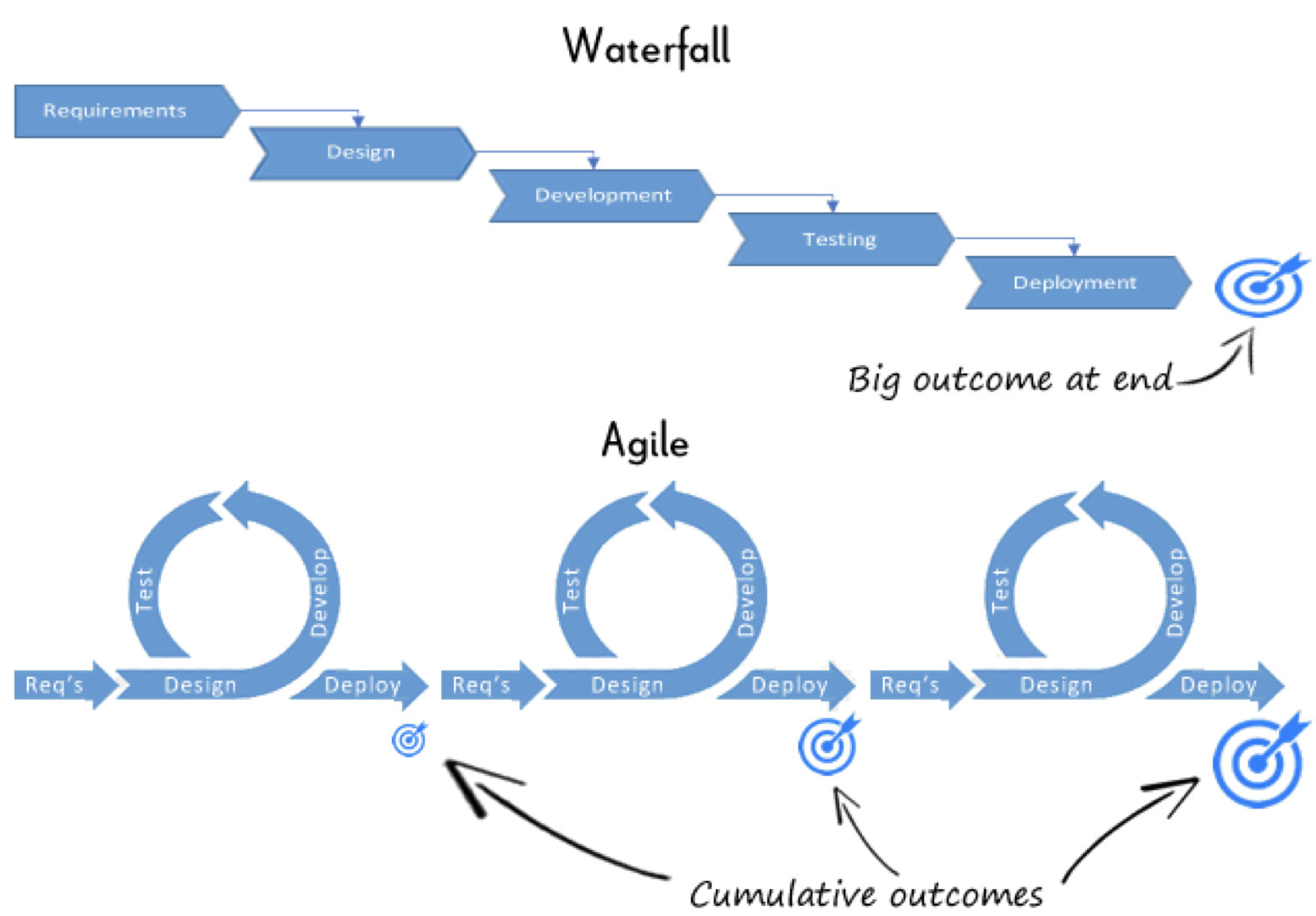

Iteration/Waterfall models

Evjen’s Ignite talk was a call to reject the “fail fast” mentality and for museums to learn from theatrical production processes that iterate, just not in a sprint-like fashion. McKenzie’s presentation discussed the limited applicability of design sprints in exhibition design, a lesson that could also be applied to technology projects (the project originally began as one to create an app or mobile-friendly website for onsite use). Chad Weinard10 states, “Grant funding, fixed requirements, timelines and resources all mean that digital projects (in museums) are almost always waterfall processes” instead of agile processes.

Rather than fight the waterfall process, we should embrace it, learning from theatre how to involve audiences during the process and allowing room for iteration based on feedback. After all, museums and theatres are mostly non-profit arts organizations vying for limited resources. For example, even after experimenting with prototyping, Ford’s Theatre is currently exploring a more iterative and audience-informed, yet still waterfall, exhibition design process for future exhibitions.

Continual feedback

Some museums have found in recent years that there is room for audience feedback throughout exhibition design processes. Eastern State Penitentiary Historic Site in Philadelphia, for example, tested prototypes of varying levels of fidelity with on-site audiences during the entire development process for its Prisons Today exhibition. The exhibition team rolled out different means of showcasing specific concepts throughout the project.

Kajsa Hartig recently raised the following question in a tweet and a Medium post: what if we got audience feedback even earlier in our processes, before we’ve even decided whether we are doing an exhibition, public program, or event?11 Following principles of human-centered design, she argues that we should think about designing experiences, and use audience feedback to figure out what kind of experience makes the most sense for the particular audience at the particular institution with the particular content. It may be worth exploring which audiences are invited to give feedback, as well. Different audiences have different needs and interests and will provide different kinds of feedback. Inviting audience feedback as part of a continual feedback model, or in early decision making, makes it even more essential that museums seek representation that is diverse, inclusive of non-binary gender identities, and deliberately anti-racist and anti-ableist.

Additionally, both museum technology and exhibition development processes largely get audience feedback on functionality, rather than content, as the process rolls on. What if we were testing for understanding and engagement, as well? There are some examples of movement in this area. For instance, Evjen has been working on a minimum viable product of augmented reality (AR) at the Michigan State University Museum, which included evaluation of functionality as well as understanding and engagement. In theatrical production, rehearsals and previews are inherently iterative processes that often include continual feedback during development, and commercial theatre workshops rely on audience feedback. After a production opens, however, consistency is the goal, except in a few special circumstances like improvisation.

Ability to tweak after launch

There is an emerging field of summative evaluation of theatrical productions, particularly in those that involve science communication.12 Theatrical productions are constrained by the typical arrangement of the Stage Manager taking control of production upon opening. It would require new thinking to realize just how evaluation results could inform productions after opening, when the Director is no longer in control of the production, and after reviews of the production have been released. Museum exhibitions do not suffer from this particular process constraint, and museums are very accustomed to informing exhibitions at various stages, including front-end evaluation, formative evaluation, remedial evaluation, and summative evaluation.13 Perhaps theatrical organizations could learn more about how all types of evaluation could be used for the success of productions, or learn from museums that build in budget lines for iteration after launch.

Move from cabinets of curiosity to focus on audience experience

The performing arts have a history as ritual and immersive environments, while museums carry the weight of a background filled with pillaging and plundering, and cabinets of curiosities.14 How might museums consider a new role as ritual and immersive environments, without ignoring their more unattractive pasts? Following the lead of thinkers like Hartig and Andrea Jones, what does it mean for us to develop experiences incorporating artifacts, rather than exhibitions of artifacts?15 16

Toward a Better Model

In his Ignite talk, Evjen recommended that museum professionals reach out to theatre professionals in their communities, rather than try to imitate tech companies. By learning from professionals who work in similar institutions, rather than following the lead of companies whose end goal is selling products, museum professionals may find practices that inform better museum work. Theatre professionals may also benefit from museum processes, like setting interpretive goals, identifying target audiences, and articulating evaluation plans, and they should reach out to museum professionals in their community to find out how those can be valuable for production.

There is no silver bullet; museums and performing arts organizations each need to choose an approach that works best for their own organization. As a general rule, we argue that we shouldn’t adopt tech firm fast-fail ideas wholesale and throw out the waterfall processes that museum and theatre professionals have honed over the years. Rather, we should learn from one another and, most importantly, adapt our processes to have audience members involved at all stages of the process, and be willing to iterate based on their feedback. Feedback-based iteration, borrowed from theatre, rather than fast-fail, will help push our organizations forward and make exhibitions, programs, and digital experiences more relevant to different audiences.

Notes

1. Max Evjen, “Jazz Hands Out!: Why #Musetech Should Be Like Theatre,” Ignite Talk, Museum Computer Network Conference, Denver, CO, 2018. https://bit.ly/2N0Mb2C (accessed February 19, 2019).

2. Shelley Bernstein, “Fighting the Three Dots of User Expectation,” BKM TECH (blog), May 6, 2015, https://www.brooklynmuseum.org/community/blogosphere/2015/05/06/fighting-the-three-dots-of-user-expectation/ (accessed February 19, 2019); also Shelley Bernstein, “Learning from Agile Fails,” BKM TECH (blog), May 5, 2015, https://www.brooklynmuseum.org/community/blogosphere/2015/05/05/learning-from-agile-fails/ (accessed February 19, 2019); and Sara Devine, “Agile by Design,” BKM TECH (blog), May 14, 2015, https://www.brooklynmuseum.org/community/blogosphere/2015/05/14/agile-by-design (accessed February 19, 2019).

3. Jake Knapp, John Zeratsky, Braden Kowitz, and Dan Bittner, Sprint: How to Solve Big Problems and Test New Ideas in Just Five Days (New York; Prince Frederick, MD: Simon & Schuster Audio, 2016); also Kate Haley Goldman and David McKenzie, “Adding Historical Voices to the Ford’s Theatre Site: Prototyping Sprint 1, Round 2,” Ford’s Theatre Blog (blog), January 29, 2018, https://www.fords.org/blog/post/adding-historical-voices-to-the-ford-s-theatre-site-prototyping-sprint-1-round-2/ (accessed February 19, 2019); also Kate Haley Goldman and David McKenzie, “Following a Historical Figure, Again: Prototyping Sprint 3,” Ford’s Theatre Blog (blog), September 12, 2018, https://www.fords.org/blog/post/following-a-historical-figure-again-prototyping-sprint-3/ (accessed February 19, 2019); also Kate Haley Goldman and David McKenzie, “How Relevant Is Too Relevant? Connecting Past & Present at the Ford’s Museum: Prototyping Sprint 2 - Round 1 (Part 1),” Ford’s Theatre Blog (blog), April 13, 2018, https://www.fords.org/blog/post/how-relevant-is-too-relevant-connecting-past-present-at-the-ford-s-museum-prototyping-sprint-2-round-1-part-1/ (accessed February 19, 2019); also Kate Haley Goldman and David McKenzie, “Learning What Visitors Want: A Shifted Plan and Prototyping in the Museum,” Ford’s Theatre Blog (blog), November 28, 2017, https://www.fords.org/blog/post/learning-what-visitors-want-a-shifted-plan-and-prototyping-in-the-museum/ (accessed February 19, 2019); and Kate Haley Goldman and David McKenzie, “Prototyping Historical Figure Cards at Ford’s Theatre: Sprint 2, Round 2,” Ford’s Theatre Blog (blog), April 20, 2018, https://www.fords.org/blog/post/prototyping-historical-figure-cards-at-ford-s-theatre-sprint-2-round-2/ (accessed February 19, 2019).

4. Jessica Bathurst and Tobie S. Stein, Performing Arts Management: A Handbook of Professional Practices (New York City: Allworth Press, 2010).

5. Colleen Dilenschneider, “Cultural Organizations Are Still Not Reaching New Audiences (DATA),” Know Your Own Bone, November 8, 2017, https://www.colleendilen.com/2017/11/08/cultural-organizations-still-not-reaching-new-audiences-data/ (accessed February 19, 2019).

6. James Chung, Susan Wilkening, and Sally Johnstone, Museums & Society 2034: Trends and Potential Futures (Washington, DC: Center for the Future of Museums, American Association of Museums, 2008).

7. McKenzie thanks Chris Evans and Margaret Middleton for this insight during a #DrinkingAboutMuseums conversation.

8. Colleen Dilenschneider, “Cultural Organizations Are Still Not Reaching New Audiences (DATA),” Know Your Own Bone, November 8, 2017, https://www.colleendilen.com/2017/11/08/cultural-organizations-still-not-reaching-new-audiences-data/ (accessed February 19, 2019).

9. Colleen Dilenschneider, “Which Is More Important for Cultural Organizations: Being Educational or Being Entertaining? (DATA),” Know Your Own Bone, December 18, 2018, https://www.colleendilen.com/2016/03/16/which-is-more-important-for-cultural-organizations-being-educational-or-being-entertaining-data/ (accessed February 19, 2019).

10. Chad Weinard, “‘Maintaining’ the Future of Museums: A slideshow on innovation and infrastructure,” Medium (blog), December 12, 2018, https://medium.com/@caw_/maintaining-the-future-of-museums-d72631f6905b (accessed February 19, 2019).

11. Kajsa Hartig, “Finding a Neutral Starting Point for Producing Museum Experiences,” Medium (blog), January 27, 2019, https://medium.com/@kajsahartig/finding-a-neutral-starting-point-for-producing-museum-experiences-3ee93c5ada12 (accessed February 19, 2019).

12. Amy Lessen, Ama Rogan, and Michael J. Blum, “Science Communication Through Art: Objectives, Challenges, and Outcomes,” in Trends in Ecology & Evolution, Vol. 31, No. 9 (September 2016), pp. 657-660.

13. Randi Korn, “New Directions in Evaluation,” in Pat Villeneuve, ed., From Periphery to Center: Art Museum Education in the 21st Century (Reston, VA: National Art Education Association, 2007).

14. John Cotton Dana, “The Gloom of the Museum,” in Gail Anderson, ed., Reinventing the Museum: Historical and Contemporary Perspectives on the Paradigm Shift, 2004.

15. Kajsa Hartig, “Finding a Neutral Starting Point for Producing Museum Experiences,” Medium (blog), January 27, 2019, https://medium.com/@kajsahartig/finding-a-neutral-starting-point-for-producing-museum-experiences-3ee93c5ada12 (accessed February 19, 2019).

16. Andrea Jones, “7 Reasons Museums Should Share More Experiences, Less Information,” Peak Experience Lab (blog), March 26, 2017, http://www.peakexperiencelab.com/blog/2017/3/24/7-reasons-why-museums-should-share-more-experiences-less-information (accessed February 19, 2019).

Bibliography

- Bathurst, Jessica, and Tobie S. Stein. Performing Arts Management: A Handbook of Professional Practices. New York City: Allworth Press, 2010.

- Bernstein, Shelley. “Fighting the Three Dots of User Expectation.” BKM TECH (blog), May 6, 2015. https://www.brooklynmuseum.org/community/blogosphere/2015/05/06/fighting-the-three-dots-of-user-expectation/ (accessed February 19, 2019).

- Bernstein, Shelley. “Learning from Agile Fails.” BKM TECH (blog), May 5, 2015. https://www.brooklynmuseum.org/community/blogosphere/2015/05/05/learning-from-agile-fails/ (accessed February 19, 2019).

- Chung, James, Susan Wilkening, and Sally Johnstone. Museums & Society 2034: Trends and Potential Futures. Washington, DC: Center for the Future of Museums, American Association of Museums, 2008.

- Dana, John Cotton. “The Gloom of the Museum.” In Gail Anderson, ed., Reinventing the Museum: Historical and Contemporary Perspectives on the Paradigm Shift, 2004.

- Devine, Sara. “Agile by Design.” BKM TECH (blog), May 14, 2015. https://www.brooklynmuseum.org/community/blogosphere/2015/05/14/agile-by-design (accessed February 19, 2019).

- Dilenschneider, Colleen. “Cultural Organizations Are Still Not Reaching New Audiences (DATA).” Know Your Own Bone. November 8, 2017. https://www.colleendilen.com/2017/11/08/cultural-organizations-still-not-reaching-new-audiences-data/ (accessed February 19, 2019).

- Dilenschneider, Colleen. “Which Is More Important for Cultural Organizations: Being Educational or Being Entertaining? (DATA).” Know Your Own Bone. December 18, 2018. https://www.colleendilen.com/2016/03/16/which-is-more-important-for-cultural-organizations-being-educational-or-being-entertaining-data/ (accessed February 19, 2019).

- Evjen, Max. “Jazz Hands Out!: Why #Musetech Should Be Like Theatre.” Ignite Talk. Museum Computer Network Conference. Denver, CO, 2018. (https://bit.ly/2N0Mb2C)

- Haley Goldman, Kate, and David McKenzie. “Adding Historical Voices to the Ford’s Theatre Site: Prototyping Sprint 1, Round 2.” Ford’s Theatre Blog (blog), January 29, 2018. https://www.fords.org/blog/post/adding-historical-voices-to-the-ford-s-theatre-site-prototyping-sprint-1-round-2/ (accessed February 19, 2019).

- Haley Goldman, Kate, and David McKenzie. “Following a Historical Figure, Again: Prototyping Sprint 3.” Ford’s Theatre Blog (blog), September 12, 2018. https://www.fords.org/blog/post/following-a-historical-figure-again-prototyping-sprint-3/ (accessed February 19, 2019).

- Haley Goldman, Kate, and David McKenzie. “How Relevant Is Too Relevant? Connecting Past & Present at the Ford’s Museum: Prototyping Sprint 2 - Round 1 (Part 1).” Ford’s Theatre Blog (blog), April 13, 2018. https://www.fords.org/blog/post/how-relevant-is-too-relevant-connecting-past-present-at-the-ford-s-museum-prototyping-sprint-2-round-1-part-1/ (accessed February 19, 2019).

- Haley Goldman, Kate, and David McKenzie. “Learning What Visitors Want: A Shifted Plan and Prototyping in the Museum.” Ford’s Theatre Blog (blog), November 28, 2017. https://www.fords.org/blog/post/learning-what-visitors-want-a-shifted-plan-and-prototyping-in-the-museum/ (accessed February 19, 2019).

- Haley Goldman, Kate, and David McKenzie. “Prototyping Historical Figure Cards at Ford’s Theatre: Sprint 2, Round 2.” Ford’s Theatre Blog (blog), April 20, 2018. https://www.fords.org/blog/post/prototyping-historical-figure-cards-at-ford-s-theatre-sprint-2-round-2/ (accessed February 19, 2019).

- Haley Goldman, Kate, and David McKenzie. “Take-Aways While Connecting Past & Present in the Ford’s Museum: Prototyping Sprint 2 - Round 1 (Part 2).” Ford’s Theatre Blog (blog), April 13, 2018. https://www.fords.org/blog/post/take-aways-while-connecting-past-present-in-the-ford-s-museum-prototyping-sprint-2-round-1-part-2/ (accessed February 19, 2019).

- Haley Goldman, Kate, and David McKenzie. “Taking It To the Streets: Prototyping Sprint 1, Round 1.” Ford’s Theatre Blog (blog), December 20, 2017. https://www.fords.org/blog/post/taking-it-to-the-streets-prototyping-sprint-1-round-1/ (accessed February 19, 2019).

- Hartig, Kajsa. “Finding a Neutral Starting Point for Producing Museum Experiences.” Medium (blog), January 27, 2019. https://medium.com/@kajsahartig/finding-a-neutral-starting-point-for-producing-museum-experiences-3ee93c5ada12 (accessed February 19, 2019).

- Jones, Andrea. “7 Reasons Museums Should Share More Experiences, Less Information.” Peak Experience Lab (blog), March 26, 2017. http://www.peakexperiencelab.com/blog/2017/3/24/7-reasons-why-museums-should-share-more-experiences-less-information (accessed February 19, 2019).

- Knapp, Jake, John Zeratsky, Braden Kowitz, and Dan Bittner. Sprint: How to Solve Big Problems and Test New Ideas in Just Five Days. New York; Prince Frederick, MD: Simon & Schuster Audio, 2016.

- Korn, Randi. “New Directions in Evaluation.” In Pat Villeneuve, ed., From Periphery to Center: Art Museum Education in the 21st Century. Reston, VA: National Art Education Association, 2007.

- Lesen, Amy, Ama Rogan, and Michael J. Blum. “Science Communication Through Art: Objectives, Challenges, and Outcomes.” Trends in Ecology & Evolution, Vol. 31, No. 9 (September 2016), pp. 657-660.

- Low, Theodore. “What is a Museum?” In Gail Anderson, ed., Reinventing the Museum: Historical and Contemporary Perspectives on the Paradigm Shift, 2006.

- Rozik, Eli. The Roots of Theatre: Rethinking Ritual and Other Theories of Origin. Iowa City: University of Iowa Press, 2002.

- Weinard, Chad. “‘Maintaining’ the Future of Museums: A Slideshow on Innovation and Infrastructure.” Medium (blog), December 12, 2018. https://medium.com/@caw_/maintaining-the-future-of-museums-d72631f6905b (accessed February 19, 2019).